Currently, TsFiles (including both TsFile and related data files) can be stored in either the local file system or the Hadoop Distributed File System (HDFS). The storage file system is selected with a single configuration item, `tsfile_storage_fs`.

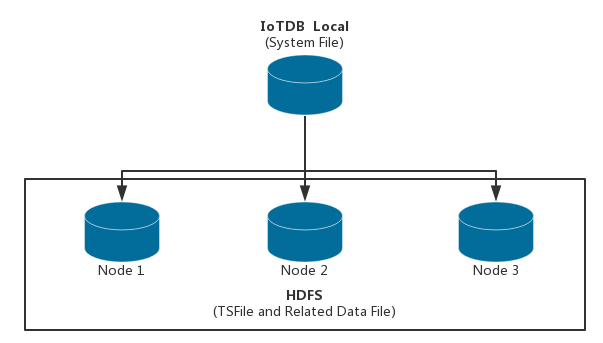

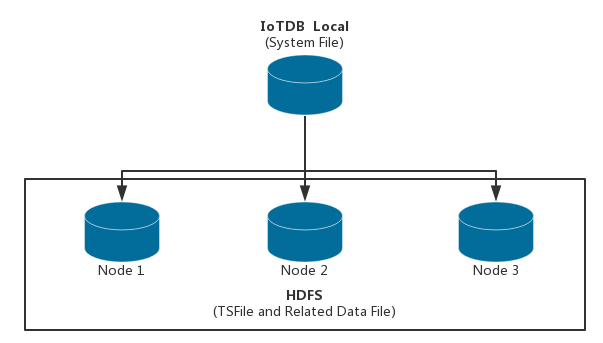

When you config to store TSFile on HDFS, your data files will be in distributed storage. The system architecture is as below:

To store TsFile and related data files in HDFS, follow these steps:

First, download the source release from the website, or clone the repository with git.

Next, build the server and Hadoop modules:

`mvn clean package -pl server,hadoop -am -Dmaven.test.skip=true -P get-jar-with-dependencies`

Then, copy the target jar of the Hadoop module, `hadoop-tsfile-X.X.X-jar-with-dependencies.jar`, into the server's target lib folder `.../server/target/iotdb-server-X.X.X/lib`.

After that, edit the user configuration in `iotdb-engine.properties`. The related configuration items are:

- tsfile_storage_fs

| Name | tsfile_storage_fs |
|---|---|
| Description | The storage file system of TsFile and related data files. Currently, the LOCAL file system and HDFS are supported. |
| Type | String |
| Default | LOCAL |
| Effective | Can only be set at the first startup |

- core_site_path

| Name | core_site_path |
|---|---|
| Description | Absolute file path of core-site.xml if TsFile and related data files are stored in HDFS. |
| Type | String |
| Default | /etc/hadoop/conf/core-site.xml |
| Effective | After restarting the system |

- hdfs_site_path

| Name | hdfs_site_path |
|---|---|
| Description | Absolute file path of hdfs-site.xml if TsFile and related data files are stored in HDFS. |
| Type | String |
| Default | /etc/hadoop/conf/hdfs-site.xml |
| Effective | After restarting the system |

- hdfs_ip

| Name | hdfs_ip |
|---|---|
| Description | IP of HDFS if TsFile and related data files are stored in HDFS. If more than one hdfs_ip is configured, Hadoop HA is used. |
| Type | String |
| Default | localhost |
| Effective | After restarting the system |

- hdfs_port

| Name | hdfs_port |
|---|---|
| Description | Port of HDFS if TsFile and related data files are stored in HDFS. |
| Type | String |
| Default | 9000 |
| Effective | After restarting the system |
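For a deployment with a single NameNode, the items above might be combined as in the fragment below. This is a minimal sketch rather than a verified configuration: the NameNode address `192.168.1.100` is a hypothetical placeholder, and the file paths and port simply repeat the documented defaults.

```properties
# Store TsFile and related data files on HDFS instead of the local file system
tsfile_storage_fs=HDFS

# Hadoop client configuration files (documented default locations)
core_site_path=/etc/hadoop/conf/core-site.xml
hdfs_site_path=/etc/hadoop/conf/hdfs-site.xml

# Hypothetical NameNode address; replace it with your own
hdfs_ip=192.168.1.100
hdfs_port=9000
```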

- hdfs_nameservices

| Name | hdfs_nameservices |
|---|---|
| Description | Nameservices of HDFS HA if Hadoop HA is used |
| Type | String |
| Default | hdfsnamespace |
| Effective | After restarting the system |

- hdfs_ha_namenodes

| Name | hdfs_ha_namenodes |
|---|---|
| Description | NameNodes under the DFS nameservices of HDFS HA if Hadoop HA is used |
| Type | String |
| Default | nn1,nn2 |
| Effective | After restarting the system |

- dfs_ha_automatic_failover_enabled

| Name | dfs_ha_automatic_failover_enabled |
|---|---|
| Description | Whether to use automatic failover if Hadoop HA is used |
| Type | Boolean |
| Default | true |
| Effective | After restarting the system |

- dfs_client_failover_proxy_provider

| Name | dfs_client_failover_proxy_provider |
|---|---|
| Description | Proxy provider if Hadoop HA is used and automatic failover is enabled |
| Type | String |
| Default | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider |
| Effective | After restarting the system |
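When Hadoop HA is used, more than one NameNode IP is given in `hdfs_ip`, together with the HA items above. The fragment below is a sketch under two assumptions: that multiple IPs are written as a comma-separated list (the same style as the `nn1,nn2` default of `hdfs_ha_namenodes`), and that the IP addresses shown, which are hypothetical placeholders, are replaced with your own. The remaining values repeat the documented defaults.

```properties
tsfile_storage_fs=HDFS

# Hypothetical IPs of two NameNodes; more than one hdfs_ip enables Hadoop HA
# (assumes a comma-separated list)
hdfs_ip=192.168.1.101,192.168.1.102
hdfs_port=9000

# Documented defaults for the HA-related items
hdfs_nameservices=hdfsnamespace
hdfs_ha_namenodes=nn1,nn2
dfs_ha_automatic_failover_enabled=true
dfs_client_failover_proxy_provider=org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider
```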

- hdfs_use_kerberos

| Name | hdfs_use_kerberos |
|---|---|
| Description | Whether to use Kerberos to authenticate to HDFS |
| Type | String |
| Default | false |
| Effective | After restarting the system |

- kerberos_keytab_file_path

| Name | kerberos_keytab_file_path |
|---|---|
| Description | Full path of the Kerberos keytab file |
| Type | String |
| Default | /path |
| Effective | After restarting the system |

- kerberos_principal

| Name | kerberos_principal |
|---|---|
| Description | Kerberos principal |
| Type | String |
| Default | your principal |
| Effective | After restarting the system |
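If the HDFS cluster requires Kerberos authentication, the three Kerberos items are set together. In this sketch the keytab path and the principal are hypothetical placeholders; use the keytab and principal issued for your own cluster.

```properties
# Authenticate to HDFS with Kerberos
hdfs_use_kerberos=true

# Hypothetical keytab location and principal; replace them with your own
kerberos_keytab_file_path=/etc/security/keytabs/iotdb.keytab
kerberos_principal=iotdb/host.example.com@EXAMPLE.COM
```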

Start the server, and TsFile will be stored on HDFS.

To switch the storage file system back to local, set `tsfile_storage_fs` to `LOCAL`. In this case, if data files are already on HDFS, either download them to the local machine and move them to your configured data file folder (`../server/target/iotdb-server-X.X.X/data/data` by default), or restart your process and import the data into IoTDB.
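Switching back only involves the storage item itself; a minimal fragment of `iotdb-engine.properties` for that case:

```properties
# Store TsFile and related data files on the local file system (the default)
tsfile_storage_fs=LOCAL
```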

- Which Hadoop versions are supported?

A: Both Hadoop 2.x and Hadoop 3.x are supported.

- When starting the server or trying to create a timeseries, I encounter the error below:

`ERROR org.apache.iotdb.tsfile.fileSystem.fsFactory.HDFSFactory:62 - Failed to get Hadoop file system. Please check your dependency of Hadoop module.`

A: This error indicates that the Hadoop module dependency is missing from the IoTDB server. You can fix it as follows:

- Build the Hadoop module:

  `mvn clean package -pl hadoop -am -Dmaven.test.skip=true -P get-jar-with-dependencies`

- Copy the target jar of the Hadoop module, `hadoop-tsfile-X.X.X-jar-with-dependencies.jar`, into the server's target lib folder `.../server/target/iotdb-server-X.X.X/lib`.